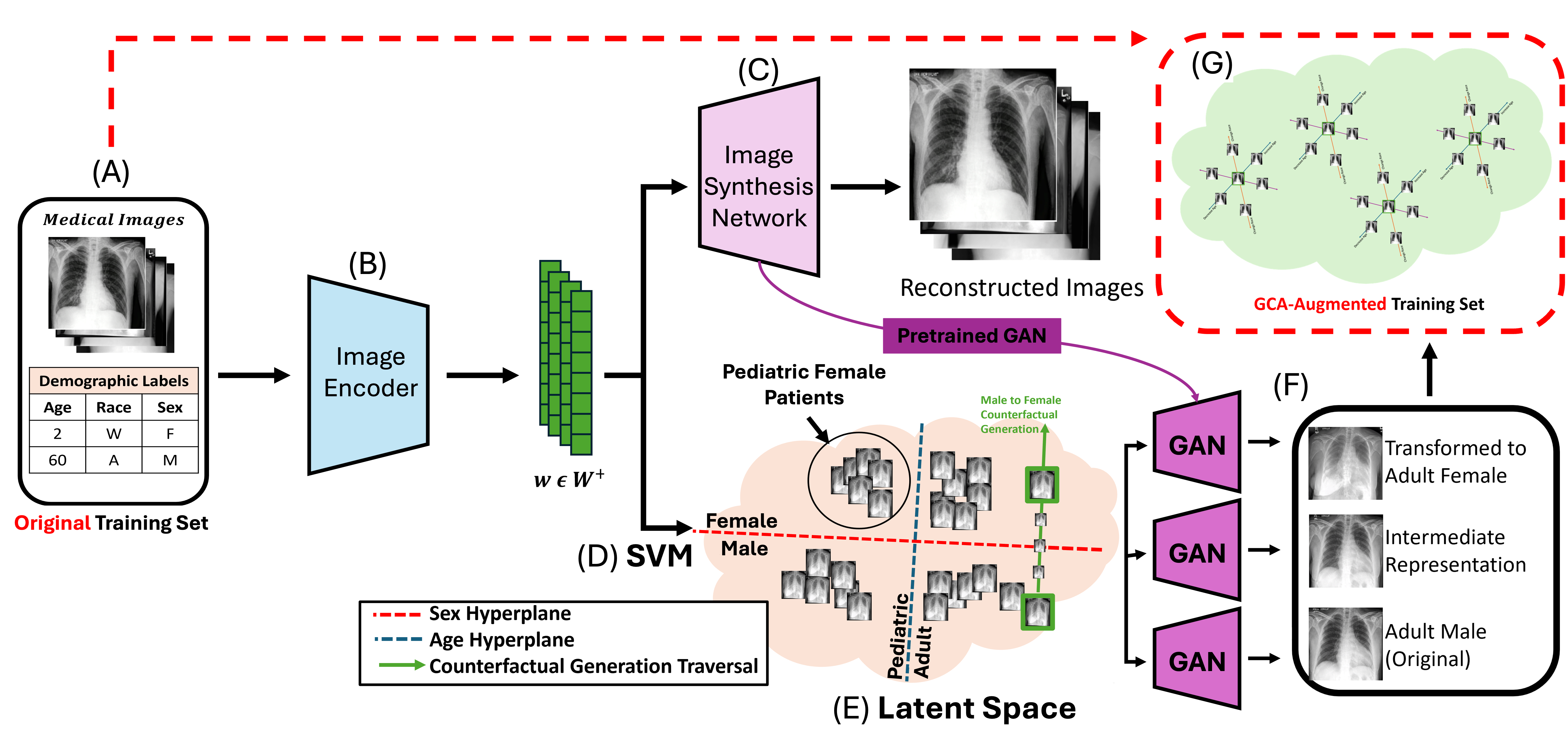

Figure: Overview of the GCA Framework

Deep learning (DL) models trained for chest X-ray (CXR) classification can encode protected demographic attributes and exhibit bias towards underrepresented patient populations. In this work, we propose Generative Counterfactual Augmentation (GCA), a framework for mitigating algorithmic bias through demographic-complete augmentation of training data. We use a StyleGAN3-based synthesis network and SVM-guided latent space traversal to generate structured age and sex counterfactuals for each CXR while preserving disease features. We extensively evaluate GCA by training DL models on the RSNA Pneumonia dataset with controlled underdiagnosis bias injected across age and sex groups at varying rates. Our results show up to a 23% reduction in false-negative rate (FNR) disparity, with a mean reduction of 9%, across varying rates of underdiagnosis bias. When evaluated on the external CheXpert and MIMIC-CXR datasets, we observe a consistent FNR reduction and improved model generalizability. We demonstrate that GCA is an effective strategy for mitigating algorithmic bias in DL models for medical imaging, ensuring trustworthiness in clinical settings.

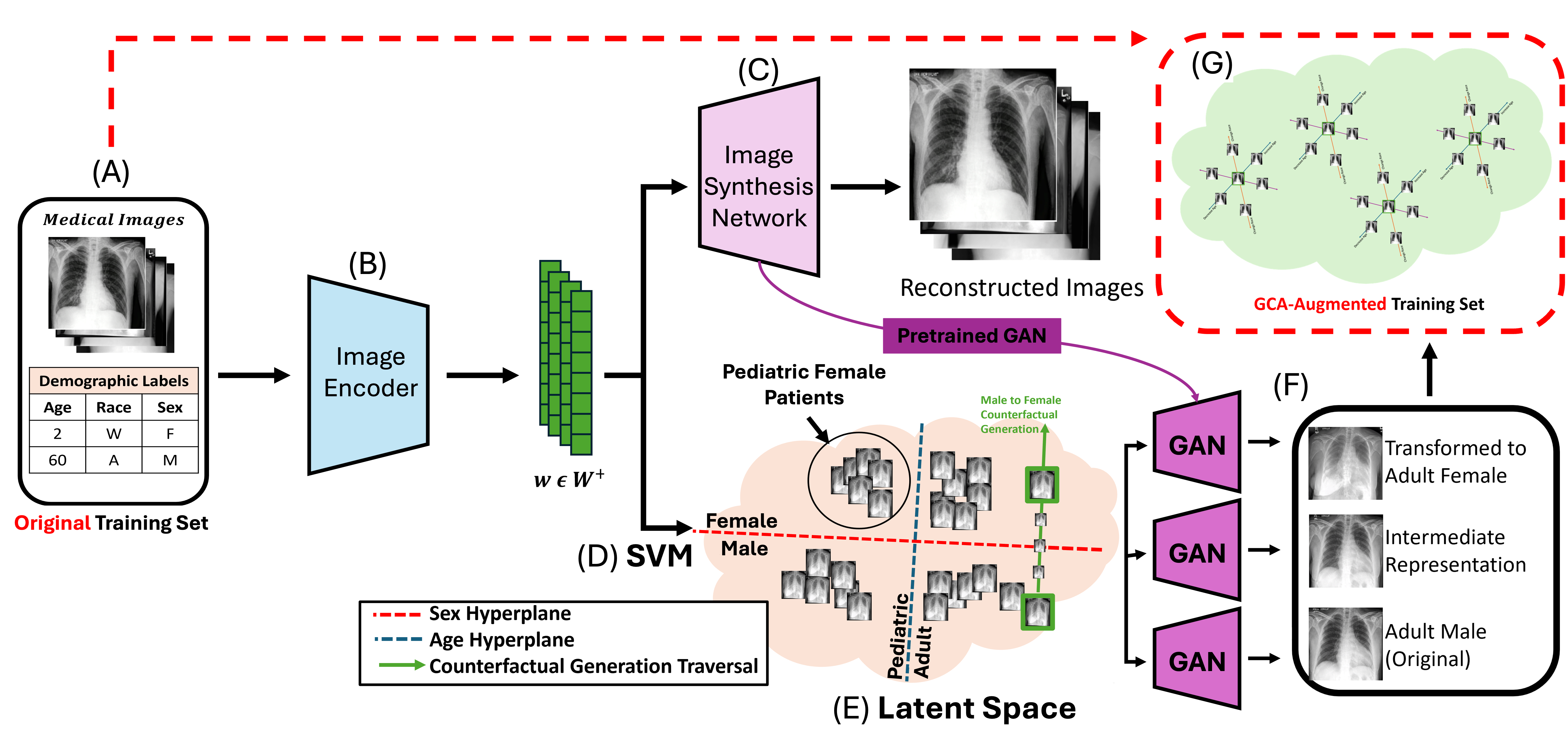

Figure: Examples of GCA counterfactuals generated by SVM-guided latent space traversal for sex (top) and age (bottom) attributes.
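As a rough illustration of what latent space traversal along an SVM boundary can look like, the sketch below moves a latent code along the unit normal of a separating hyperplane. It assumes a linear SVM has already been fitted to latent codes labelled by attribute (for example, the `coef_` and `intercept_` of a scikit-learn linear SVM). The function names, the step sizes, and the two-dimensional toy latent are all hypothetical, and this is not the authors' implementation.

```python
import math


def unit(v):
    """Return v scaled to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("hyperplane normal must be non-zero")
    return [x / norm for x in v]


def signed_distance(z, w, b):
    """Signed distance of latent z from the hyperplane w.z + b = 0."""
    norm = math.sqrt(sum(x * x for x in w))
    return (sum(zi * wi for zi, wi in zip(z, w)) + b) / norm


def traverse(z, w, alpha):
    """Move latent z by alpha units along the unit normal of the hyperplane.

    Positive alpha pushes z towards the positive side of the boundary
    (e.g. one sex or an older age group), negative alpha towards the other.
    """
    n = unit(w)
    return [zi + alpha * ni for zi, ni in zip(z, n)]


def counterfactual_latents(z, w, steps):
    """Return one edited latent per step size (hypothetical schedule)."""
    return [traverse(z, w, a) for a in steps]


if __name__ == "__main__":
    w, b = [3.0, 4.0], 0.0          # toy hyperplane (assumption)
    z = [0.0, 0.0]                  # toy latent code (assumption)
    for z_cf in counterfactual_latents(z, w, [-2.0, 0.0, 2.0]):
        print(z_cf, signed_distance(z_cf, w, b))
```

Each edited latent would then be passed through the synthesis network to render the counterfactual image. Because the step is taken along a unit normal, the signed distance to the boundary changes by exactly `alpha`, which makes the step size easy to interpret.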

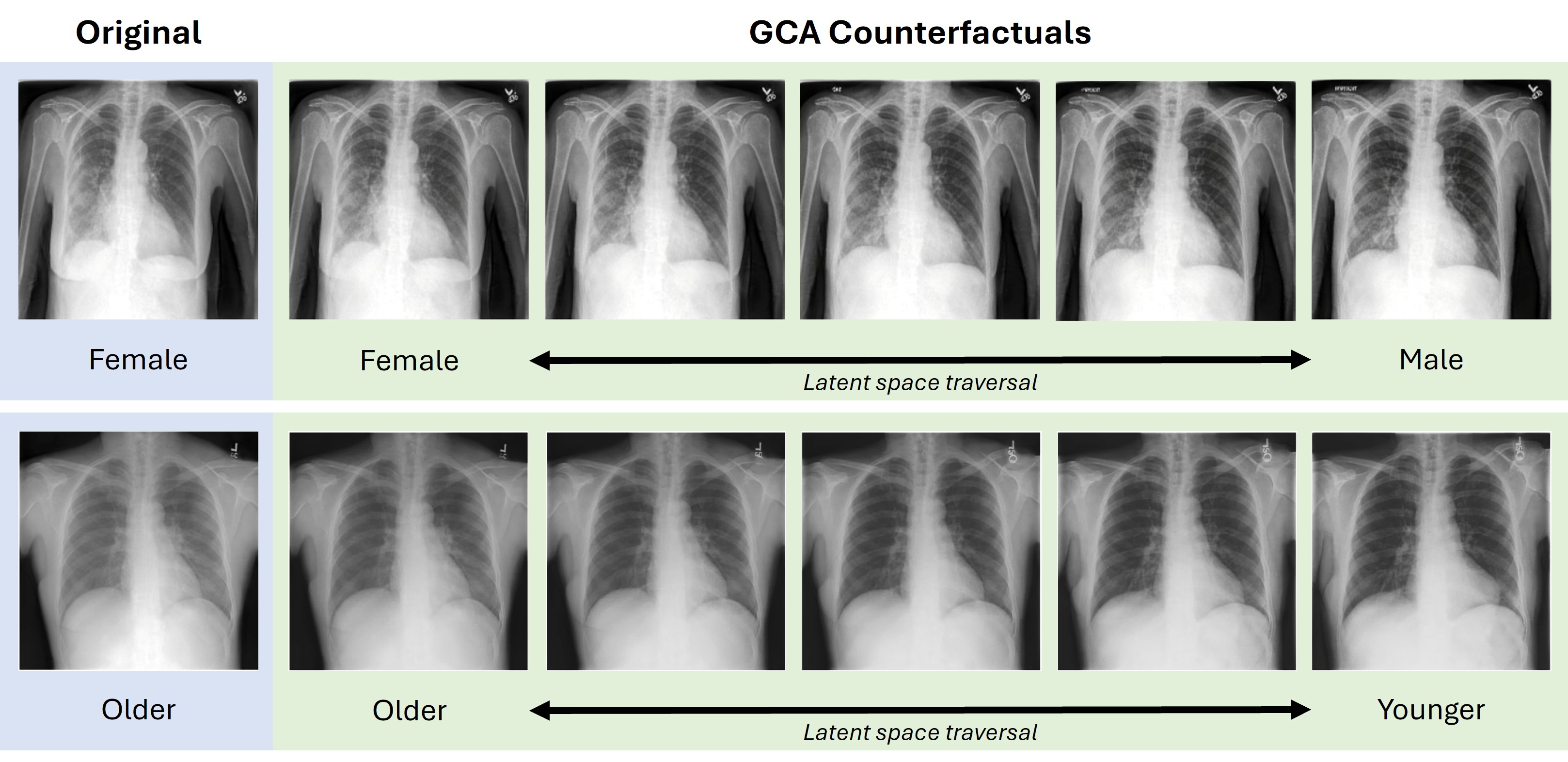

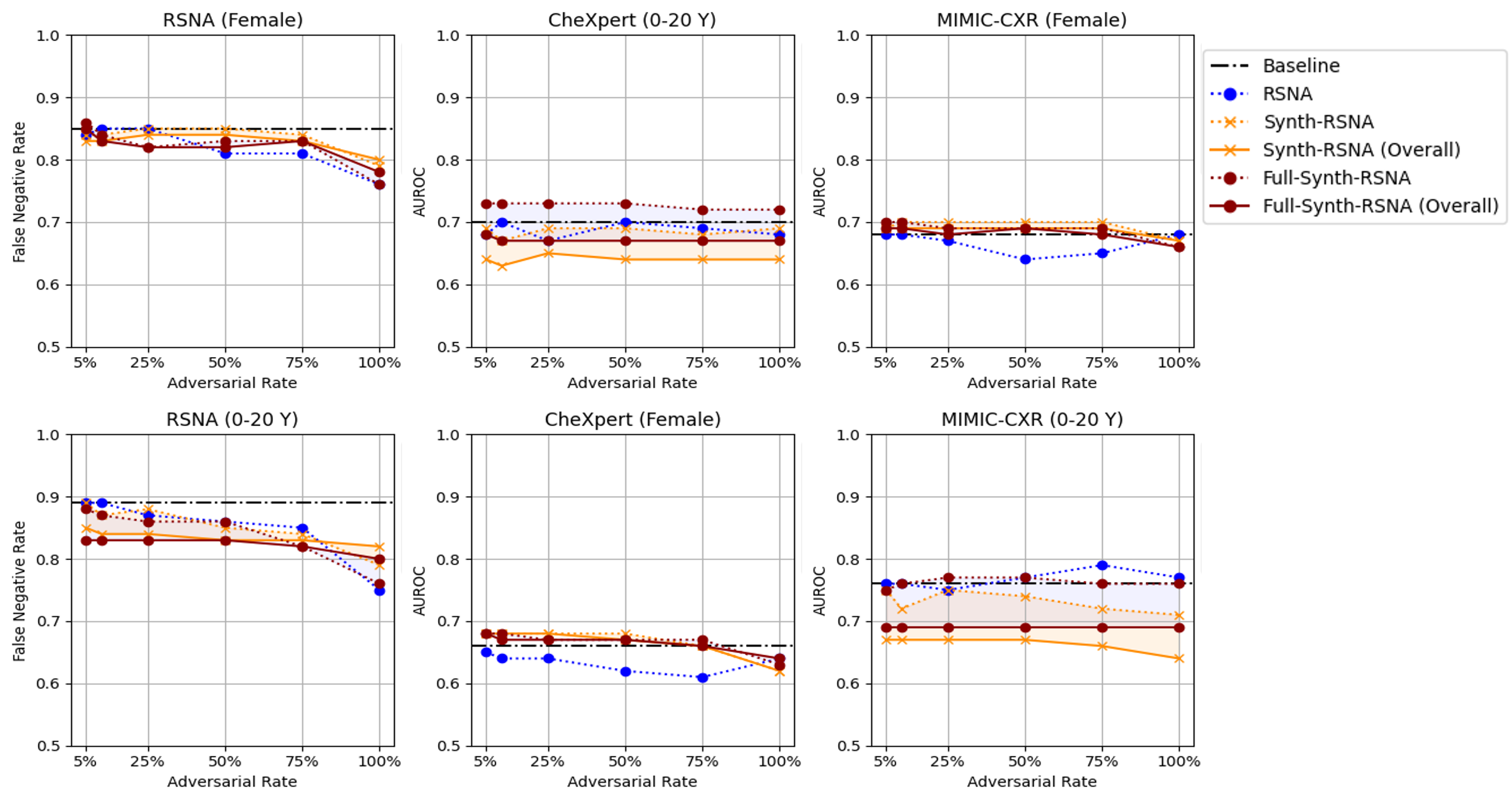

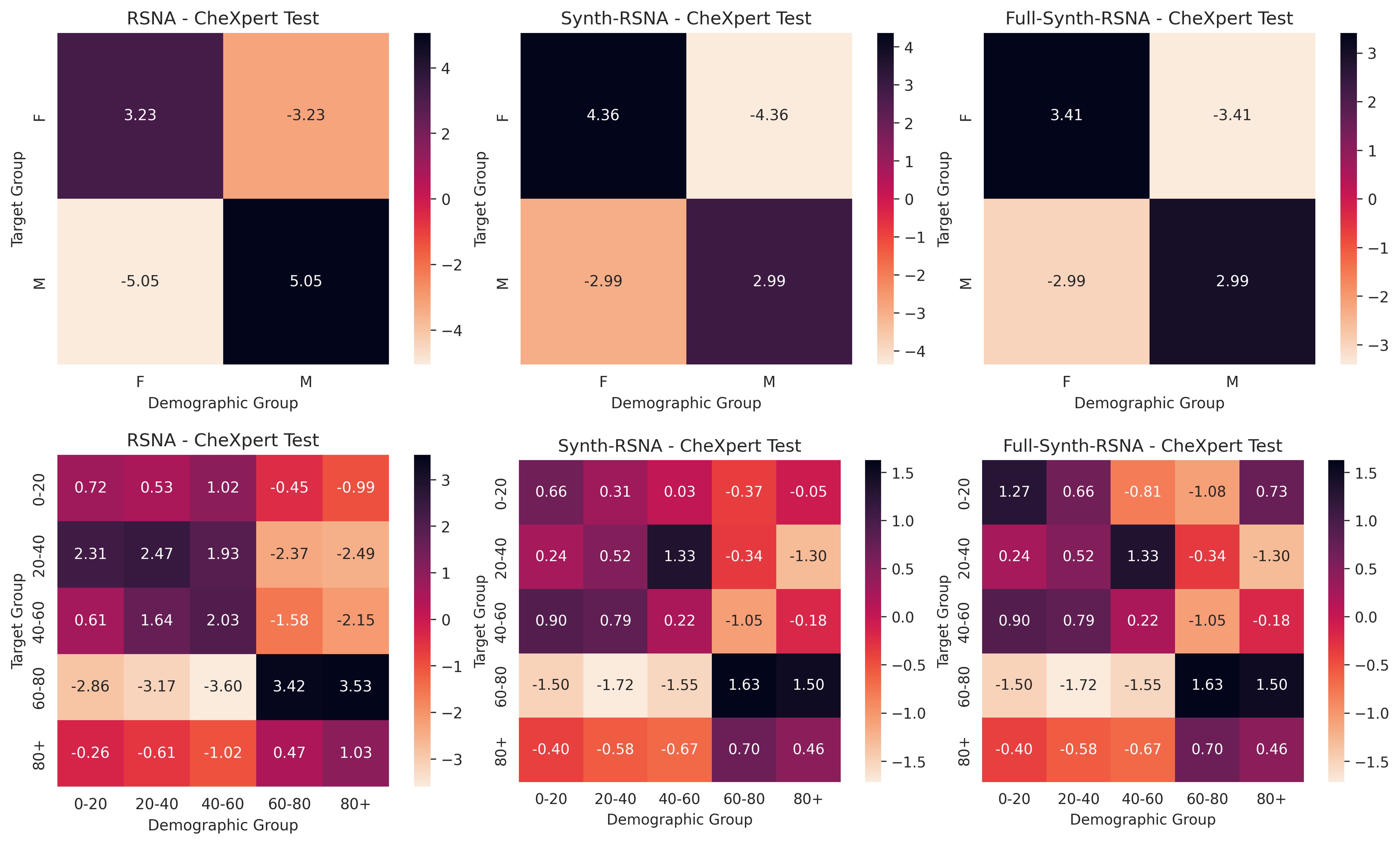

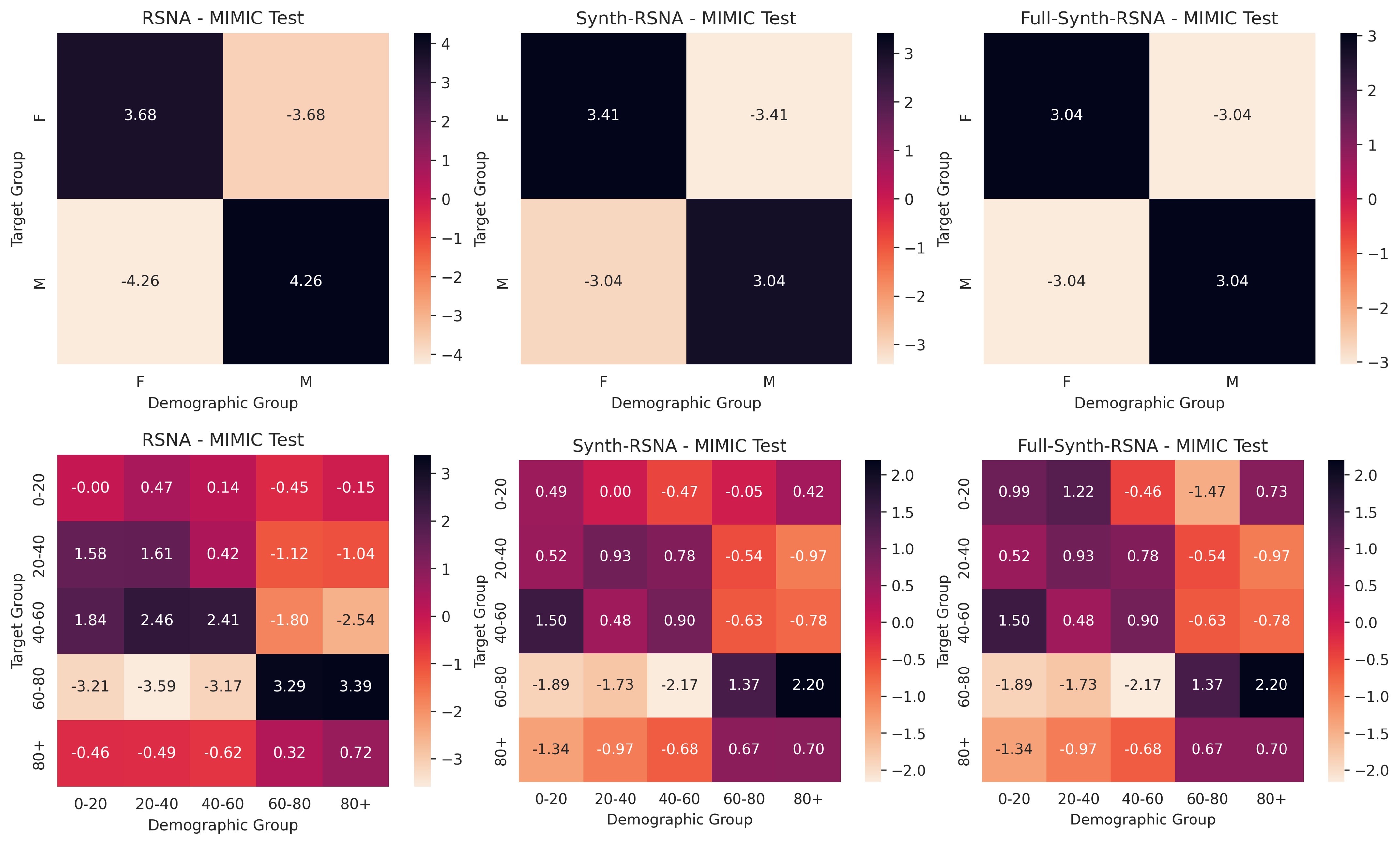

We evaluated the impact of controlled bias injection on DenseNet121 FNR and AUROC performance. Models trained on the original (RSNA), demographically targeted synthetic (Synth-RSNA), and demographic-complete synthetic (Full-Synth-RSNA) datasets were tested on the RSNA, CheXpert, and MIMIC-CXR test sets.
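One common way to simulate underdiagnosis is to relabel a fraction of the positive training cases in a targeted group as negative. The sketch below follows that reading; the function name, the seed handling, and the second group label `20-40Y` are assumptions for illustration, and the exact injection protocol used in the paper may differ.

```python
import random


def inject_underdiagnosis(labels, groups, target_group, rate, seed=0):
    """Flip a fraction `rate` of positive labels in `target_group` to negative.

    labels: list of 0/1 training labels (1 = disease present)
    groups: list of demographic group names, aligned with labels
    Returns a new label list; the input is left unchanged.
    """
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must be between 0 and 1")
    rng = random.Random(seed)
    # Indices of positive cases that belong to the targeted group.
    positives = [
        i for i, (y, g) in enumerate(zip(labels, groups))
        if y == 1 and g == target_group
    ]
    n_flip = round(rate * len(positives))
    flipped = set(rng.sample(positives, n_flip))
    return [0 if i in flipped else y for i, y in enumerate(labels)]


if __name__ == "__main__":
    labels = [1, 1, 1, 1, 1, 0, 1, 0]
    groups = ["0-20Y"] * 4 + ["20-40Y"] * 4   # toy groups (assumption)
    print(inject_underdiagnosis(labels, groups, "0-20Y", rate=0.5))
```

Only training labels are altered in this sketch. Test labels stay clean so that the measured FNR reflects the effect of the injected bias on the model rather than on the evaluation data.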

Figure: FNR for targeted demographic groups compared to the overall DenseNet121 model’s FNR.

Figure: AUROC for targeted demographic groups compared to the overall DenseNet121 model’s AUROC.
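The group-versus-overall FNR comparison in these figures can be reproduced with a few lines of code. The sketch below computes FNR as FN / (FN + TP) and reports the gap between a targeted group and the whole test set. Treating the gap as "group minus overall" is an assumption here, since the paper's precise disparity definition is not restated in this summary, and the toy data and group names are hypothetical.

```python
def false_negative_rate(y_true, y_pred):
    """FNR = FN / (FN + TP), computed over binary labels and predictions."""
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    if fn + tp == 0:
        raise ValueError("FNR is undefined without positive cases")
    return fn / (fn + tp)


def fnr_gap(y_true, y_pred, groups, target_group):
    """FNR of the targeted group minus the FNR over all samples."""
    idx = [i for i, g in enumerate(groups) if g == target_group]
    group_fnr = false_negative_rate(
        [y_true[i] for i in idx], [y_pred[i] for i in idx]
    )
    return group_fnr - false_negative_rate(y_true, y_pred)


if __name__ == "__main__":
    y_true = [1, 1, 1, 1, 0, 0]
    y_pred = [0, 0, 1, 1, 0, 1]
    groups = ["F", "F", "M", "M", "F", "M"]   # toy groups (assumption)
    print(fnr_gap(y_true, y_pred, groups, "F"))
```

A positive gap means the targeted group is missed more often than the population as a whole, which is the pattern that underdiagnosis bias is expected to produce.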

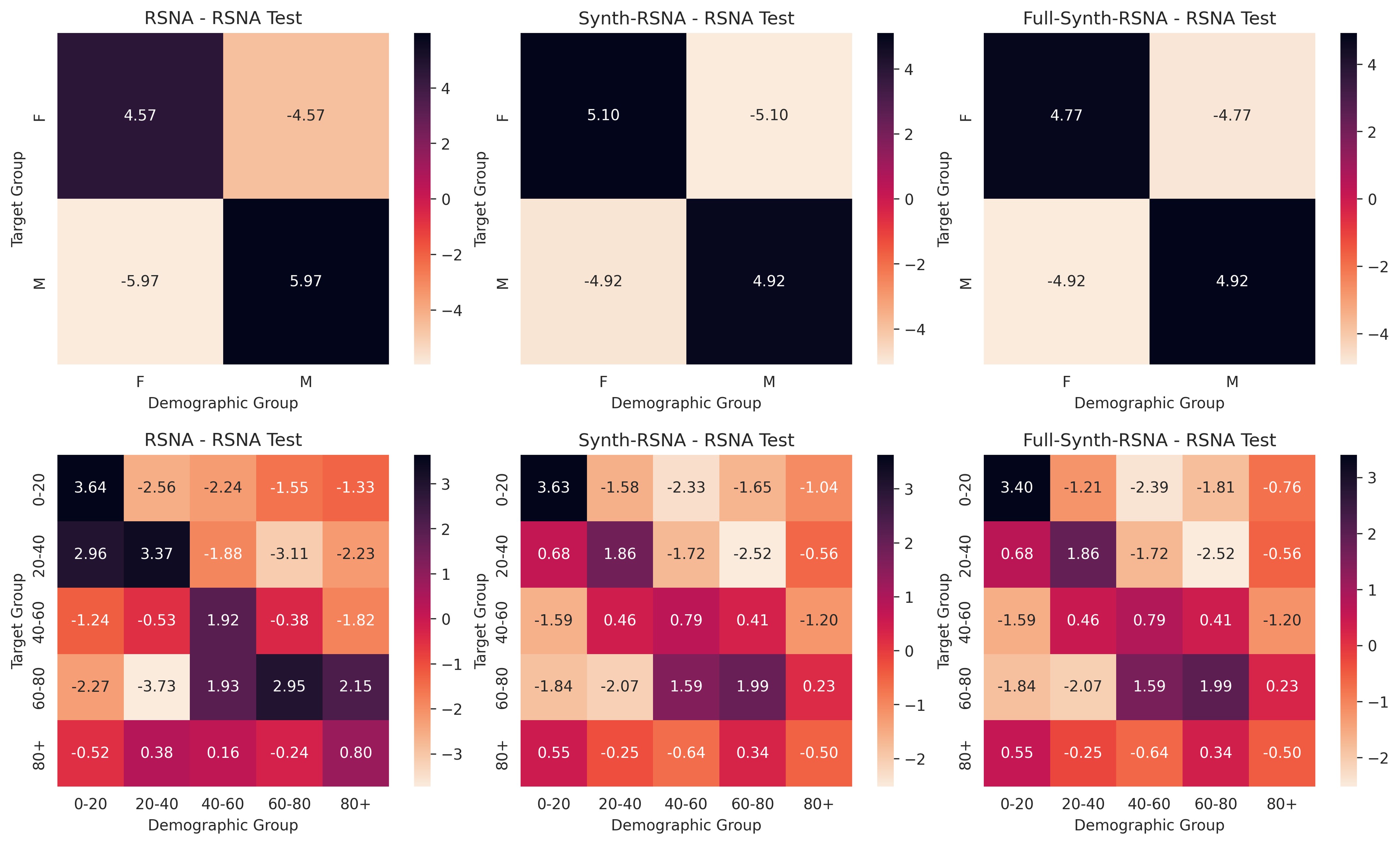

GCA improves robustness on targeted groups without adversely affecting accuracy on non-targeted groups. When GCA is applied, the vulnerability (ν) of the 0-20Y group decreases considerably, from ν = 2.96 to ν = 0.68 (∆ = −2.28), for both Synth-RSNA-Age and Full-Synth-RSNA.

Figure: Vulnerability of the targeted and non-targeted demographic groups for models trained on the RSNA (column 1), Synth-RSNA (column 2), and Full-Synth-RSNA (column 3) datasets and tested on the RSNA test set.

Figure: Vulnerability of the targeted and non-targeted demographic groups for models trained on the RSNA (column 1), Synth-RSNA (column 2), and Full-Synth-RSNA (column 3) datasets and tested on the CheXpert test set.

Figure: Vulnerability of the targeted and non-targeted demographic groups for models trained on the RSNA (column 1), Synth-RSNA (column 2), and Full-Synth-RSNA (column 3) datasets and tested on the MIMIC-CXR test set.

GCA offers a scalable and effective framework for mitigating algorithmic bias in DL models through structured counterfactual generation. Our findings suggest that GCA not only improves model fairness and robustness but also has the potential to be adapted for other imaging modalities and tasks, ensuring trustworthiness in clinical settings.

@InProceedings{Uwaeze_2025_ICCV,
  author    = {Uwaeze, Jason and Kulkarni, Pranav and Braverman, Vladimir and Jacobs, Michael A. and Parekh, Vishwa S.},
  title     = {Generative Counterfactual Augmentation for Bias Mitigation},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops},
  month     = {October},
  year      = {2025},
  pages     = {1153-1160}
}